Generating AWS S3 Presigned URLs

A quick guide, with a Python script, to generating AWS S3 presigned URLs

TL;DR Summary

Key points when using S3 presigned URLs

The AWS Management Console and the AWS CLI can generate presigned URLs only for sharing objects (i.e. the GET operation). The AWS SDK is used in this guide for GET, PUT and DELETE.

The presigned URLs for uploading (PUT) and deleting (DELETE) objects can only be used programmatically. Opening one in a browser sends a GET request, which does not match the HTTP method the URL was signed for, so you will get the "SignatureDoesNotMatch" error.

Using an expired presigned URL returns an "Access Denied, Request has expired" error response.

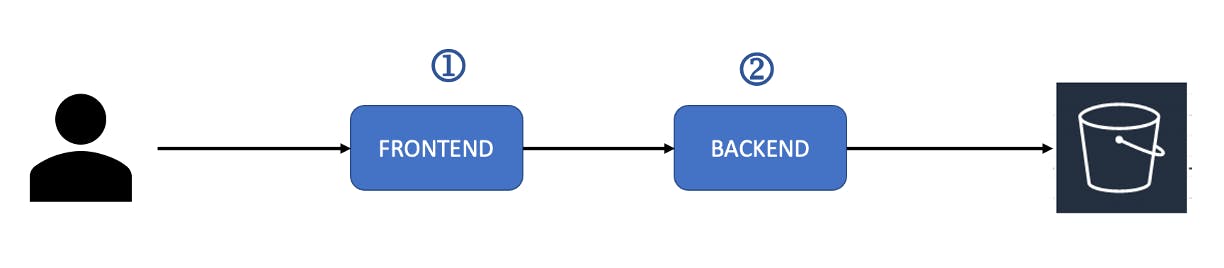
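To make the expiry behaviour concrete, here is a minimal sketch that reads the expiry time out of a presigned URL's query string. It assumes the URL carries either the Signature Version 4 parameters X-Amz-Date and X-Amz-Expires, or the older style with an absolute Expires timestamp. The function names are hypothetical and are not part of the helper script.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlparse


def presigned_url_expiry(url):
    """Return the UTC expiry time encoded in a presigned URL, or None."""
    query = parse_qs(urlparse(url).query)
    if "X-Amz-Date" in query and "X-Amz-Expires" in query:
        # Signature Version 4: signing time plus a lifetime in seconds.
        signed_at = datetime.strptime(query["X-Amz-Date"][0], "%Y%m%dT%H%M%SZ")
        signed_at = signed_at.replace(tzinfo=timezone.utc)
        return signed_at + timedelta(seconds=int(query["X-Amz-Expires"][0]))
    if "Expires" in query:
        # Older signature style: absolute expiry as a Unix timestamp.
        return datetime.fromtimestamp(int(query["Expires"][0]), tz=timezone.utc)
    return None


def is_expired(url, now=None):
    """Return True when the expiry time encoded in the URL has passed."""
    expiry = presigned_url_expiry(url)
    if expiry is None:
        raise ValueError("No expiry information found in URL")
    now = now or datetime.now(timezone.utc)
    return now >= expiry
```

Checking locally like this only saves a round trip; S3 still makes the final decision when the URL is used.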

High level Use Case

A user can perform GET, PUT or DELETE operations using a frontend application

Upon receiving requests, the backend application will generate the intended presigned URL for the respective operations to interact with the S3 bucket
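The flow above could be sketched on the backend side roughly as follows. This is an illustration only: handle_request and CLIENT_METHODS are hypothetical names, and the S3 client is passed in as a parameter (for example the object returned by boto3.client('s3')) so the sketch itself has no dependencies.

```python
# Maps the operation requested by the frontend to the S3 client method to presign.
CLIENT_METHODS = {
    "get": "get_object",
    "put": "put_object",
    "delete": "delete_object",
}


def handle_request(s3_client, operation, bucket, key, expires_in=60):
    """Hypothetical backend handler: return a presigned URL for one object."""
    if operation not in CLIENT_METHODS:
        raise ValueError(f"Unsupported operation: {operation}")
    return s3_client.generate_presigned_url(
        ClientMethod=CLIENT_METHODS[operation],
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```

The frontend would then use the returned URL with the matching HTTP method (GET, PUT or DELETE).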

Full Script

You can get the full script from this GitHub repository.

Prerequisites

Ensure the following prerequisites are in place before running the helper script, which you can put together by following the steps below.

Target S3 Bucket exists

The user/role to generate the presigned URL has permission to do so

An access key ID and secret access key are already configured, either with aws configure or directly in the ~/.aws/credentials file
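As a quick way to check the last prerequisite, the sketch below looks for an access key pair in the shared credentials file. It assumes the default location and the "default" profile, and the function name is hypothetical. It only inspects that file, so credentials supplied through environment variables or an IAM role would not be detected.

```python
import configparser
from pathlib import Path


def has_static_credentials(path=None, profile="default"):
    """Check a shared credentials file for an access key pair in one profile."""
    path = Path(path) if path else Path.home() / ".aws" / "credentials"
    parser = configparser.ConfigParser()
    parser.read(path)  # a missing file simply yields no sections
    if not parser.has_section(profile):
        return False
    section = parser[profile]
    return "aws_access_key_id" in section and "aws_secret_access_key" in section
```

Calling has_static_credentials() with no arguments checks the default file for the current user.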

Tech Stack

I will be using AWS SDK for Python to demonstrate GET, PUT and DELETE operations using the S3 presigned URL.

The steps mentioned below are meant to be put into a single Python file (named helper.py) for simplicity.

Putting the script together

Import Modules

The utility script will be created using the following modules.

requests and boto3 are not built-in modules. Please remember to install them with pip3 install or pip install

    import argparse
    import sys

    import requests
    import boto3
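If you are unsure whether the two third-party modules are installed, a small check like the one below can tell you before the imports fail. This is an optional sketch and missing_modules is a hypothetical helper name.

```python
import importlib.util


def missing_modules(names):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(["requests", "boto3"])
if missing:
    print(f"Install the missing modules first: pip install {' '.join(missing)}")
```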

Helper Class

A class named S3Helper is created to use S3 client from the boto3 library.

    class S3Helper:
        def __init__(self):
            self.client = boto3.client('s3')

Functions under Helper Class

Generate Presigned URL

Generating the presigned URL is common to all three operations. The function needs to know:

The bucket and the bucket key (or file) to act on, and the client method to presign (get_object, put_object or delete_object for GET, PUT and DELETE respectively)

Note that each generated URL is tied to exactly one object

        def generate_presign_url(self, bucket, bucket_key, client_method, expires_in=60):
            # Common method to generate the presigned URL; the operation is set via the client method
            url = self.client.generate_presigned_url(
                ClientMethod=client_method,
                Params={
                    'Bucket': bucket,
                    'Key': bucket_key
                },
                # ExpiresIn is a number of seconds; cast in case a string comes from the command line
                ExpiresIn=int(expires_in)
            )
            return url
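One detail worth guarding against is the expiry value itself. The sketch below converts the value to an integer and checks it against an upper bound of seven days (604800 seconds), which is the limit I am assuming here for URLs signed with Signature Version 4. validate_expires_in is a hypothetical helper and is not part of the script above.

```python
MAX_EXPIRES_IN = 7 * 24 * 60 * 60  # 604800 seconds, the upper bound assumed here


def validate_expires_in(value):
    """Convert an expiry value to int and check it is within 1..MAX_EXPIRES_IN."""
    seconds = int(value)  # accepts "60" from the command line as well as 60
    if not 1 <= seconds <= MAX_EXPIRES_IN:
        raise ValueError(
            f"expires_in must be between 1 and {MAX_EXPIRES_IN} seconds, got {seconds}"
        )
    return seconds
```

The result could be passed as the expires_in argument of generate_presign_url. Note that a URL signed with temporary credentials may stop working earlier, when those credentials expire.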

Upload File to S3

The function upload_file handles the PUT operation: the script reads the binary content of the target file.

Using the URL, a PUT request then uploads that content to S3 under the intended key.

        def upload_file(self, filepath, upload_url):
            # Read the target file from the local machine in binary mode and upload it to S3
            try:
                with open(filepath, 'rb') as object_file:
                    object_data = object_file.read()
                response = requests.put(upload_url, data=object_data)
                print(f'PUT Operation Status Code: {response.status_code}')
            except FileNotFoundError:
                print(f"Couldn't find {filepath}. For a PUT operation, the file to upload must exist on your computer.")
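A variation that is easier to test returns the status code instead of printing it and takes the HTTP call as a parameter. This is only a sketch: upload_bytes and the http_put parameter are hypothetical, and http_put is assumed to be any callable shaped like requests.put(url, data=...).

```python
def upload_bytes(filepath, upload_url, http_put):
    """Send the file's raw bytes with a PUT request and return the status code.

    Returns None when the local file does not exist.
    """
    try:
        # Binary mode keeps the bytes exactly as they are on disk.
        with open(filepath, "rb") as object_file:
            body = object_file.read()
    except FileNotFoundError:
        return None
    response = http_put(upload_url, data=body)
    return response.status_code
```

With the requests module installed, it would be called as upload_bytes('sample.txt', url, requests.put).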

Delete File From S3

Similarly, a DELETE request is made to delete a specific file from S3 with the generated URL.

        def delete_file(self, delete_url):
            # Send a request to delete the target file from S3 with the generated URL
            response = requests.delete(delete_url)
            print(f'DELETE Operation Status Code: {response.status_code}')
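The printed status codes can be hard to interpret at a glance, so here is a small sketch that turns them into messages. It assumes the success codes 200 for GET and PUT and 204 for DELETE, and treats 403 as the catch-all for expired URLs, mismatched methods and missing permissions. describe_status is a hypothetical helper, and the mapping is deliberately incomplete.

```python
# Status codes treated as success for each operation (an assumption of this sketch).
SUCCESS_STATUS = {"get": 200, "put": 200, "delete": 204}


def describe_status(action, status_code):
    """Turn the HTTP status code of a presigned request into a short message."""
    label = action.upper()
    if status_code == SUCCESS_STATUS.get(action):
        return f"{label} succeeded"
    if status_code == 403:
        return f"{label} rejected: URL expired, wrong HTTP method, or no permission"
    if status_code == 404:
        return f"{label} failed: bucket or key not found"
    return f"{label} returned unexpected status {status_code}"
```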

Commandline Arguments

Using the built-in module argparse, the following arguments are created to take in the required inputs.

    parser = argparse.ArgumentParser()
    # Remove the default group for optional arguments so only the custom groups are listed in the help output
    parser._action_groups.pop()
    # Define custom argument groups
    required = parser.add_argument_group('Required arguments')
    options = parser.add_argument_group('Options to pass when generating S3 presigned URL')
    required.add_argument('-a', '--action', choices=['get', 'put', 'delete'], help='Type of S3 presigned URL to generate', required=True)
    required.add_argument('-b', '--bucket', help='Bucket name', required=True)
    # The key is checked by the script logic rather than by argparse
    required.add_argument('-k', '--key', help='Bucket key, i.e. the filename or path to file')
    # type=int so the expiry is handled as a number of seconds rather than a string
    options.add_argument('-e', '--expires', type=int, help='Define url to expire in seconds', default=60)
    options.add_argument('-f', '--uploadfile', help='File to upload')
    args = parser.parse_args()
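To try the arguments without touching the command line, the parser can be wrapped in a function and fed a list of strings. The sketch below is a simplified variant (no custom groups or help texts), and build_parser is a hypothetical name.

```python
import argparse


def build_parser():
    """Build the script's options in a function so they can be parsed from a list."""
    parser = argparse.ArgumentParser()
    parser.add_argument("-a", "--action", choices=["get", "put", "delete"], required=True)
    parser.add_argument("-b", "--bucket", required=True)
    parser.add_argument("-k", "--key")
    parser.add_argument("-e", "--expires", type=int, default=60)
    parser.add_argument("-f", "--uploadfile")
    return parser


example = build_parser().parse_args(
    ["-a", "get", "-b", "sample-bucket", "-k", "sample.txt", "-e", "120"]
)
print(example.action, example.bucket, example.key, example.expires)
# prints: get sample-bucket sample.txt 120
```

Because of type=int, example.expires is the integer 120 rather than the string '120'.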

Utility Script Logic

The remaining portion validates the provided command line arguments to determine what needs to be executed.

    try:
        s3_helper = S3Helper()
        client_method = f'{args.action}_object'
        if args.action == 'get':
            if args.key is not None:
                url = s3_helper.generate_presign_url(args.bucket, args.key, client_method, args.expires)
                print(f'S3 Presigned URL (GET): {url}')
            else:
                print('Please provide the path (-k | --key) to retrieve the file from S3')
        elif args.action == 'put':
            if args.key is not None and args.uploadfile is not None:
                url = s3_helper.generate_presign_url(args.bucket, args.key, client_method, args.expires)
                print(f'S3 Presigned URL (PUT): {url}')
                print('S3 Presigned URL (PUT) not meant to be used interactively')
                s3_helper.upload_file(args.uploadfile, url)
            else:
                print('Please provide the file to upload (-f | --uploadfile) and (-k | --key) the name to save the file as in S3')
        elif args.action == 'delete':
            if args.key is not None:
                url = s3_helper.generate_presign_url(args.bucket, args.key, client_method, args.expires)
                print(f'S3 Presigned URL (DELETE): {url}')
                print('S3 Presigned URL (DELETE) not meant to be used interactively')
                s3_helper.delete_file(url)
            else:
                print('Please provide the path (-k | --key) of the file to delete from S3')
    except Exception as err:
        print('Please ensure you have provided the appropriate parameters for the actions.')
        print(f'Error - {type(err).__name__}:{err}\n')
        sys.exit(1)
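The argument checks in the logic above could also be pulled into one small function, which keeps the branching short and makes the rules testable on their own. This is a sketch of one possible refactoring; validate_args and its messages are hypothetical.

```python
def validate_args(action, key, uploadfile=None):
    """Return an error message for missing arguments, or None when all is well."""
    if key is None:
        # Every action works on exactly one object, so the key is always needed.
        return "Please provide the object key (-k | --key)"
    if action == "put" and uploadfile is None:
        return "Please provide the file to upload (-f | --uploadfile)"
    return None
```

The script would call it once before generating any URL and print the message if one is returned.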

Usage

After putting the script together, you should be able to see the following arguments when using the help (-h) option.

    % python3 helper.py -h
    usage: helper.py [-h] -a {get,put,delete} -b BUCKET [-k KEY] [-e EXPIRES] [-f UPLOADFILE]

    Required arguments:
      -a {get,put,delete}, --action {get,put,delete}
                            Type of S3 presigned URL to generate
      -b BUCKET, --bucket BUCKET
                            Bucket name
      -k KEY, --key KEY     Bucket key, i.e. the filename or path to file

    Options to pass when generating S3 presigned URL:
      -e EXPIRES, --expires EXPIRES
                            Define url to expire in seconds
      -f UPLOADFILE, --uploadfile UPLOADFILE
                            File to upload

You are now ready to use the script to test the GET, PUT and DELETE operations with S3 presigned URLs.

Additional Details

The steps above use the AWS SDK. As mentioned in the key points, you can also generate a presigned URL for the GET operation via the AWS Management Console or the AWS CLI.

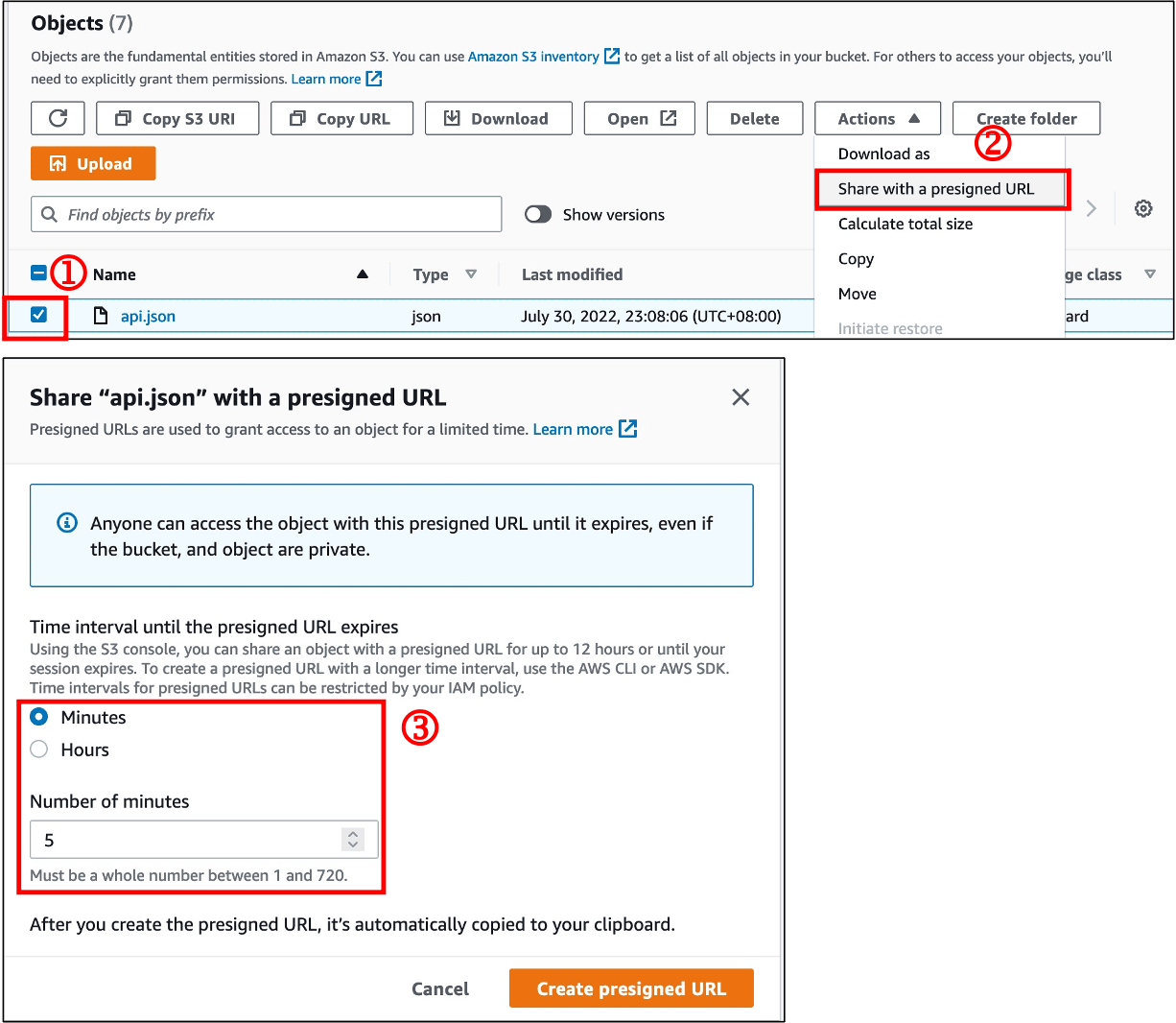

AWS Management Console

Image Annotations

Navigate to the intended S3 bucket

Select the file you want to share with a presigned URL

Click on Actions > Share with a presigned URL

Define the time interval before URL expires

AWS CLI

    # General Syntax:
    aws s3 presign <S3_URI> --expires-in <SECONDS>

    # Example:
    aws s3 presign s3://sample-bucket/sample.txt --expires-in 60
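The CLI takes a single S3 URI, whereas the helper script takes the bucket (-b) and key (-k) separately. A small sketch for converting one into the other is shown below; split_s3_uri is a hypothetical helper name.

```python
def split_s3_uri(s3_uri):
    """Split 's3://bucket/path/to/key' into (bucket, key)."""
    prefix = "s3://"
    if not s3_uri.startswith(prefix):
        raise ValueError(f"Not an S3 URI: {s3_uri}")
    # Everything up to the first slash is the bucket, the rest is the key.
    bucket, _, key = s3_uri[len(prefix):].partition("/")
    if not bucket:
        raise ValueError(f"Missing bucket name in: {s3_uri}")
    return bucket, key


bucket, key = split_s3_uri("s3://sample-bucket/sample.txt")
print(bucket, key)  # prints: sample-bucket sample.txt
```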

Retrospective

While the AWS Solutions Architect certification exam focuses only on theory, it's important to put that theoretical knowledge into practice.

Initially, I thought the S3 presigned URL could be used interactively (i.e. clicking the URL would show a GUI to allow the user to upload a file). That was quite a big misconception 😬

I hope the walkthrough of putting the script together and script usage will allow you to better understand this amazing S3 feature 🙌🏼

Cheers 🍻